LOG Centralization: Using Filebeat and Logstash

Logs give information about system behavior. Every service produces logs with different content and a different format, because the format depends on the nature of the service. For example, the logs generated by a web server, by a normal user, and by the system itself will be entirely different.

Logs are a very important factor for troubleshooting and security. By analyzing the logs we get a good understanding of how the system works, as well as the reason for a disaster if one occurs. Common use cases of log analysis are debugging, performance analysis, security analysis, predictive analysis, IoT, and logging. Log analysis captures application information and the time of each service event in a form that is easy to analyze. Logs also carry timestamp information, which shows the behavior of the system over time.

Logs are generated in different files per service. For example, web server logs are written to apache.log, while auth.log contains authentication logs. If we had 100 or 1000 systems in our company and something went wrong, we would have to check every system to troubleshoot the issue. With 10,000 systems, that becomes pretty difficult to manage, right?

Now suppose all the logs are taken from every system and put on a single system or server, along with their time, date, and hostname. Troubleshooting and analysis become pretty easy. That's the power of centralizing the logs.

Here I am using 3 VMs/instances to demonstrate the centralization of logs. On VM 1 and VM 2 I have installed a web server and Filebeat, and on VM 3 I have installed Logstash.

Filebeat: Filebeat is a log data shipper for local files. The Filebeat agent is installed on the server that needs to be monitored; it monitors all the logs in the log directory and forwards them to Logstash. Filebeat works based on two components: prospectors/inputs and harvesters. Inputs are responsible for managing the harvesters and finding all the sources to read from. A harvester reads a file line by line and sends the content to the output; it is also responsible for opening and closing the file.

Logstash: Logstash is used to collect data from disparate sources and normalize it into the destination of your choice. Any type of event can be modified and transformed with a broad array of input, filter, and output plugins. Inputs generate the events, filters modify them, and outputs ship them elsewhere. Here we are shipping to a file with hostname and timestamp.

Before getting started with the configuration: I am using Ubuntu 16.04 on all the instances.

1. Start the Apache service: `sudo service apache2 start`

Parse apache logs filebeats download

2. Download Filebeat using curl: `curl -L -O` followed by the URL of the Filebeat package.

Parse apache logs filebeats install

3. Install Filebeat using dpkg: `sudo dpkg -i filebeat-6.2.b`

4. Configure Filebeat to ship the logs to Logstash. For that, edit the /etc/filebeat/filebeat.yml file:

       filebeat.prospectors:
       # Each - is a prospector. Most options can be set at the prospector level, so
       # you can use different prospectors for various configurations.
       # Below are the prospector specific configurations.
       - type: log
         # Change to true to enable this prospector configuration.
         enabled: true
         # Paths that should be crawled and fetched.

   Here Filebeat will ship all the logs inside /var/log/ to Logstash.

5. Comment out (with #) all other outputs and, in the hosts field, specify the IP address of the Logstash VM:

       # - Logstash output -
       output.logstash:
         # The Logstash hosts
         hosts:

6. Start the Filebeat service: `service filebeat start`

To open the Beats Data Shippers page, select a region in the top navigation bar, then click Beats Data Shippers in the left-side navigation pane. Optional: If this is the first time you go to the Beats Data Shippers page, view the information displayed in the message that appears and click OK to authorize the system to create a service-linked role for your account.
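The Filebeat and Logstash configuration described in the steps above can be sketched as a small shell script that stages both config files in a scratch directory for review. This is only a sketch under stated assumptions, not the author's exact setup: the Logstash IP `192.0.2.10`, the port `5044`, the `/var/log/*.log` glob, the output directory `/var/log/central/`, the file name `beats-to-file.conf`, and the `[beat][hostname]` field reference (as sent by Filebeat 6.x) are all illustrative choices that do not appear in the original text.

```shell
#!/bin/sh
# Sketch: stage a Filebeat config and a Logstash pipeline in a scratch directory.
set -eu

# Hypothetical values; override them from the environment.
LOGSTASH_HOST="${LOGSTASH_HOST:-192.0.2.10}"   # example IP for the Logstash VM (VM 3)
BEATS_PORT="${BEATS_PORT:-5044}"               # assumed Beats port
STAGE_DIR="${STAGE_DIR:-$(mktemp -d)}"         # nothing is written under /etc

# Filebeat side (VM 1 and VM 2): read /var/log and ship to Logstash only.
cat > "$STAGE_DIR/filebeat.yml" <<EOF
filebeat.prospectors:
- type: log
  enabled: true
  paths:
    - /var/log/*.log   # assumed glob for "all the logs inside /var/log/"

# All other outputs stay commented out so Logstash is the only destination.
#output.elasticsearch:
#  hosts: ["localhost:9200"]

output.logstash:
  hosts: ["${LOGSTASH_HOST}:${BEATS_PORT}"]
EOF

# Logstash side (VM 3): receive Beats events and write one file per host and day.
cat > "$STAGE_DIR/beats-to-file.conf" <<EOF
input {
  beats {
    port => ${BEATS_PORT}
  }
}
output {
  file {
    # Hypothetical target directory; hostname and date become part of the file name.
    path => "/var/log/central/%{[beat][hostname]}-%{+YYYY-MM-dd}.log"
  }
}
EOF

echo "staged configs in $STAGE_DIR"
```

After reviewing the staged files, `filebeat.yml` would be copied to /etc/filebeat/filebeat.yml on VM 1 and VM 2, and the pipeline file would go into the Logstash pipeline directory on VM 3 (commonly /etc/logstash/conf.d/ for package installs), followed by a restart of both services.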

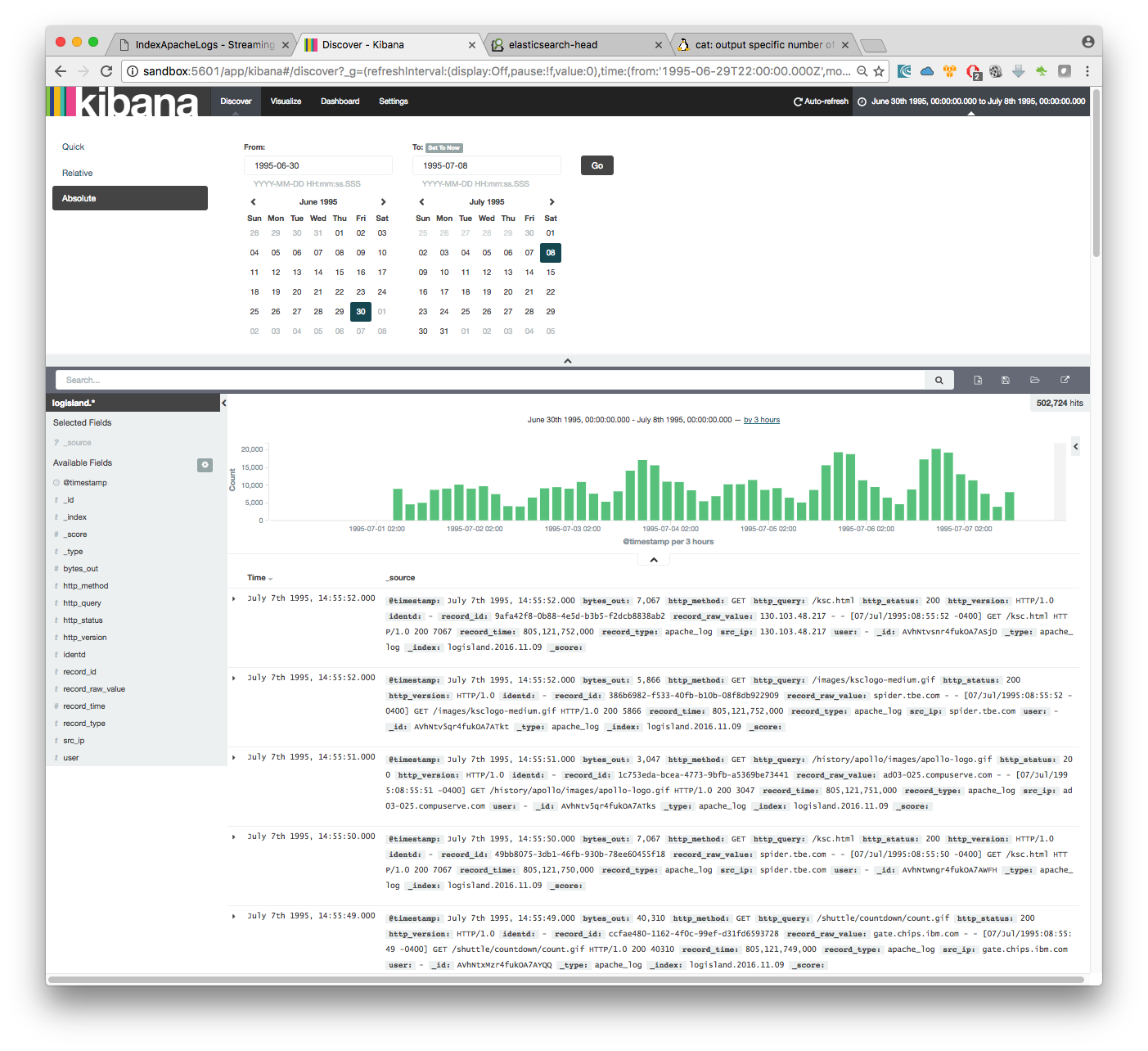

To facilitate the analytics and display of Apache log data by using a visualization tool, we recommend that you define JSON as the format of the log data in the Apache conf file.
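As a rough illustration of the JSON recommendation above, the following shell sketch stages an Apache config snippet that defines a JSON access-log format. The format nickname `access_json`, the chosen fields, the file name `json-log.conf`, and the use of `${APACHE_LOG_DIR}` (as found in Debian/Ubuntu Apache packages) are assumptions made for this example; the original text does not specify them.

```shell
#!/bin/sh
# Sketch: stage an Apache snippet that writes access logs as JSON objects.
set -eu

STAGE_DIR="${STAGE_DIR:-$(mktemp -d)}"   # scratch directory, nothing is installed

# Quoted heredoc: the shell leaves backslashes and ${APACHE_LOG_DIR} untouched.
cat > "$STAGE_DIR/json-log.conf" <<'EOF'
# "access_json" is a hypothetical nickname for this log format.
LogFormat "{ \"time\": \"%{%Y-%m-%dT%H:%M:%S%z}t\", \"client\": \"%a\", \"method\": \"%m\", \"path\": \"%U\", \"status\": %>s, \"bytes\": %B }" access_json
CustomLog ${APACHE_LOG_DIR}/access_json.log access_json
EOF

echo "staged Apache snippet in $STAGE_DIR/json-log.conf"
```

Each request then produces one JSON object per line, which a shipper can forward without a custom parsing pattern. Values containing unusual characters may still need escaping checks before they are treated as strict JSON.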
